- Historic Photos From the NYC Municipal Archives - In Focus - The Atlantic

- Food Fascist | Michael Ruhlman

- P.S. A Column On Things: The death of photojournalism as we have known it

- RAND: Not So Reasonable? - Simon Says... - "When RAND terms are required (be they FRAND, RAND-Z or plain RAND), they indicate a desire to hold in reserve the option of controlling the relationship with the end user, introducing different terms depending on the competitive and market context. This scope for additional restrictions and for variability dependent on the field of use is what makes any form of RAND a red flag to open source developers."

- Welcome - The Data Journalism Handbook

- What We Can Learn From Super-Slow Motion | VSL

- The End of Gaming (Consoles) | Gaming Business Review - "Of all the segments I have ever worked in, the gaming one has the most ‘religious fervor’ – often mixed with a bizarre bigotry. Fanboys rage at each other and the suppliers try hard to play to this audience. Yes, many know there is a bigger audience out there, but it was not the big game makers that produced Farmville, or Angry Birds or even Draw Something. Is this because the big game makers are busy trying to be a film-studio (or even replace film studios)? Maybe."

Monday, April 30, 2012

Links for 04-30-2012

Friday, April 27, 2012

Links for 04-27-2012

- John Grisham Explains Baseball

- Who Made That Pie Chart? - NYTimes.com

- Is GPL licensing in decline? | Open Source Software - InfoWorld

- Midsize Insider: IT Trends: A Vast, Moving Cloud? - "Are Big Data, mobility, and the cloud converging to reshape the IT environment at a fundamental level? Red Hat's "cloud evangelist" says that they are. True, his job is to say things like that. But in fact, these IT trends are hard to miss: They dominate the tech press week after week. They form the backdrop for all the buzz about individual tech firms."

- Daily Research News Online no. 15349 - Gartner Offers $AUD 20m for IDEAS - Missed this news. Gartner is acquiring IDEAS (which had acquired DH Brown a long time ago).

- State of the Computer Book Market, part 4: The Languages - O'Reilly Radar - "Java continues to be the number one language from book units sold and dollars. There is some shuffling going on with the languages tough. JavaScript is very hot now, as is R. Likely a result of Android and big data driving folks to these languages. "

- Five not-so-obvious reasons why Apple won't be Sony redux | ZDNet - RT @sjvn: RT @dbfarber: Five not-so-obvious reasons why Apple won't be Sony redux @ldignan << Makes sense.

- The Google attack: How I attacked myself using Google Spreadsheets and I ramped up a $1000 bandwidth bill | A Computer Scientist in a Business School - RT @samj: The Google attack: How I attacked myself using Google Spreadsheets and I ramped up a $1000 bandwidth bill ...

- Talent Shortage Looms Over Big Data - WSJ.com

- Open Cloud Conf - RT @pmikeyp: The Open Cloud Conference at the Silicon Valley Cloud Center is next week - << I'll be on panel Tues AM

- The Library of Utopia - Technology Review - "Google's ambitious book-scanning program is foundering in the courts. Now a Harvard-led group is launching its own sweeping effort to put our literary heritage online. Will the Ivy League succeed where Silicon Valley failed?"

- High-stakes one-shot Prisoner's Dilemma on a British game show with an astounding strategy - Boing Boing

- High stakes for open source in the commercial cloud | Open Source Software - InfoWorld

Wednesday, April 25, 2012

Links for 04-25-2012

- What Your Klout Score Really Means | Epicenter | Wired.com - Fun stuff in the comment thread too :-) Some well above-average snark.

- Apple's Tim Cook on Windows 8: It's like mating a fridge with a toaster - Computerworld Blogs - The degree to which tablets and laptops will converge over time is an important question that doesn't have an obvious answer.

- Secure virtualization for tactical environments – Military Embedded Systems

- What If a Highway Ran Through the Infinite Corridor? - An interstate was once proposed to run through Cambridge. via @MIT_alumni

- Meet Nicira. Yes, people will call it the VMware of networking — Cloud Computing News

- Sony's fall and Japan's hang-ups | Nanotech - The Circuits Blog - CNET News

- Introducing the data journalism handbook | News | guardian.co.uk - "Edited by Liliana Bounegru, Jonathan Gray and Lucy Chambers, the book will be made freely available online under a CC BY-SA license so anyone can read and share it. Additionally a printed version and an e-book will be published by O'Reilly Media. If you want to be notified when the book is released, you can sign up on the website."

Monday, April 23, 2012

Pike Street Market

Originally uploaded by ghaff

I had a few hours when I was in Seattle and dropped by Pike Street Market.

I'm interviewed about cloud at Cloud Fair

Gordon talks about what Red Hat has been up to recently in the cloud, including OpenStack and OpenShift. Interviewed by Dell's Director of Web Technology Barton George at Cloud Fair 2012 in Seattle, WA. April 18, 2012

Friday, April 20, 2012

Thursday, April 19, 2012

Links for 04-19-2012

- HealthCheck Fedora - Where's the beef? - The H Open Source: News and Features

- What Amazon's ebook strategy means - Charlie's Diary - "Firstly, it's not an accident that Bezos' start-up targeted the book trade. Bookselling in 1994 was a notoriously backward-looking, inefficient, and old-fashioned area of the retail sector. There are structural reasons for this. A bookshop that relies on walk-in customers needs to have a wide range of items in stock because books are not fungible; a copy of the King James Version Bible is not an acceptable substitute for "REAMDE" by Neal Stephenson or "Inside the Puzzle Palace: A History of the NSA" by James Bamford."

- Why Open Source Is the Key to Cloud Innovation

- Why e-books cost so much | Internet & Media - CNET News - "Here's something that tends to get lost in the debate over e-book prices: Paper doesn't cost very much."

- Yes, Copyright's Sole Purpose Is To Benefit The Public | Techdirt

- It’s official: IBM and Red Hat get with OpenStack — Cloud Computing News - RT @SteveTomasco: It’s official: IBM and Red Hat get with OpenStack #ibmcloud #openstack

- SAS 70 / SSAE 16 Issues & How to Fix Them » Data Center Knowledge - "For data centers, the challenge associated with the use of SAS 70 and SSAE 16 is that both standards are focused on internal controls over financial reporting (ICFR) concerns. ICFR is crucial for corporations that must comply with Sarbanes-Oxley requirements. In most cases, however, ICFR is of limited concern for the services data centers provide for customers. With limited reporting options, data centers were somewhat stuck between a rock and a hard place."

- Why You Can’t Get a Taxi - Magazine - The Atlantic - "But just because Uber is good for its passengers and drivers doesn’t mean that it’s good for everyone. Taxi drivers are a powerful political constituency in many cities. And as Robert McNamara noted drily, “Like any other business, taxi drivers think it would be great if no one could compete with them.” In some cities, including San Francisco and Washington, D.C., a regulatory backlash has hit the company hard."

- IBM and the Eames Office: Minds of Modern Mathematics iPad app

Thursday, April 12, 2012

Podcast: I talk open clouds with Chris Wells

My colleague Chris Wells turns the tables on me and interviews me about the characteristics of an open cloud. These include:

- is open source

- has a viable, independent community

- is based on open standards

- gives you the freedom to use IP

- is deployable on the infrastructure of your choice

- is pluggable and extensible with an open API

- enables portability of applications and data to other clouds

Listen to OGG (0:09:09)

[Transcript]

Gordon Haff: You're listening to the Cloudy Chat Podcast with Gordon Haff.

Chris Wells: Welcome, everyone. For today's podcast, we're going to do something a little bit different and turn around. My name is Chris Wells and I'm a Product Marketing Manager here at Red Hat. Today I'm actually going to interview Gordon Haff, our cloud evangelist.

Gordon: Hey. Thanks, Chris.

Chris: We'll turn the tables here a little bit. I'll ask you some of the questions. I understand that you've been doing a lot of work, and Red Hat in particular has been doing a lot of work, around open cloud. Could you just talk a little bit about what does Red Hat mean when it says "open cloud?"

Gordon: Sure. Well, the idea of an open cloud is really that you can build a cloud out of all your IT infrastructure and not just a part of it. Also, there are a lot of other characteristics that are very important to, really, all the customers we talk about: the ability to move applications from one cloud to another, the ability to develop applications once and deploy them anywhere you want, the ability, really, to be in control of your own roadmap. Obviously, as an open source company, Red Hat places a lot of value on openness across a number of different dimensions. I have to say, we've actually been a little bit surprised maybe about how much this message has resonated with our cloud customers.

Chris: Well, when you talked a little bit about the open source piece there, is it simple enough to say that open cloud equals open source, or is there more to it than that?

Gordon: There's a lot more to it than that. Open source is clearly very important. I think a lot of the aspects of openness around clouds are kind of hard to imagine how you might get there without open source, but open source by itself does have a lot of benefits. It lets the users control their own implementation. It doesn't tie them to a particular vendor. It lets users collaborate with communities. If they want something that's a bit different, or maybe they and some other end users want something that's a bit different, they can go in that direction and don't have to convince some vendor to do it.

Obviously, part of that is viewing source code and being able to do their own development. Although that's very important, it doesn't stop there.

Chris: Where else does it go?

Gordon: Staying on the open source theme, one of the first things is that open source isn't just code and license. Not like, "OK, I can see the code. It's licensed under Apache. Everything's great. Don't need to worry about it any more." The community that's associated with that open source code is really important. Really, if it's just open source and it's still just a single company that's involved in it, that probably doesn't buy an end user an awful lot, because all the developers are still with that single company. Really realizing the collaborative potential for open source means that you have a vibrant community, and that involves things like governance. How do you contribute code? What are the processes for that kind of thing? Where does innovation come from?

Also related to that is open standards. Again, these things are all related to each other. Again, I'd probably argue truly open standards aren't possible outside of open source, because then they're always going to be tied to a single vendor in some way or another.

Standardization, in the sense of official standards, even isn't necessarily the critical thing here. These things take a long time to roll out, and cloud computing is such a new area, but the idea that you have standards, even if they're not fully standardized yet, is still very important.

Chris: That's very interesting. Now, you mentioned a little bit earlier about talking to different types of customers. Do you see as customers have more and more interest in going to cloud computing, are they more interested in the open cloud or open source type of approach than they might have been in a traditional data center?

Gordon: Yeah, I think so because cloud is really about spanning all this heterogeneous infrastructure, whether it's public clouds, whether it's different virtualization platforms, or whether it's physical servers even. I think a lot of people think cloud is just virtualization or public clouds, but actually, pretty much everyone we talk to says that they really see for a lot of workloads, that maybe 20 to 25 percent of the workloads in organizations, that those are really going to stay in physical servers for the foreseeable future. There's definitely an interest in moving those workloads to the cloud.

Chris: Now, there's a lot of vendors in the cloud space besides Red Hat. Red Hat's obviously taken this approach to really go down the open cloud paradigm that we talked about because that's pretty consistent with our heritage and our history around being open source. What do you see as challenges for other vendors that today aren't open?

Gordon: The big challenge is that users are demanding openness. And in fact, if you look at the cloud computing marketing literature out there, not to mention any names, but you see some very much closed vendors out there who have "Open" in huge type on their websites. It's usually because they're trying to frame themselves as being open in some narrow sense. Perhaps they've contributed their APIs to some standards organization or something. I think they're going to be challenged when you compare them with a company like Red Hat, for example, which has a long heritage in open source, knows how to work with communities, knows how to - really understands the depth of openness that is required. I think they're going to be challenged to combat that effectively.

Chris: For our listeners out there in the audience, I think most of them would agree, because I think you and I have talked to a lot of customers that definitely want this openness. The question's going to be everyone is trying to say that they're open. If you're in the customer's shoes today, or our listener's shoes, what kind of questions can they ask to actually figure out is something truly open versus someone just saying the word "open?"

Gordon: I've gone through a few of these already, but let me go down the list of things that we've come up with that, as we talk to our customers, really resonate with them as mattering. I've mentioned open source, mentioned the community associated with that open source, mentioned open standards.

Another important aspect of openness is freedom to use IP. Now, we don’t have a lot of time to get into that here but, suffice it to say that, although modern open source licenses and open standards can mitigate certain aspects of IP issues (patents, copyrights, and so forth), freedom to use IP is a separate issue that users ought to be aware of.

Is deployable in the infrastructure of your choice. This speaks to in cloud [how] it really can't be just an extension of a particular virtualization platform, for example. It really needs to be independent of that other layer and deployable in public, choice of virtualization platforms and physical servers.

The ability to extend APIs, adding features, can't be under the control of a single implementation or vendor. That was one reason that something called the Deltacloud API that Red Hat uses is under the auspices of the Apache Software Foundation, which is a very well-regarded, meritocracy-based governance regime, so that kind of governs how people can contribute and extend that.

Finally, just the idea that you have portability to other clouds. You can't have a cloud that requires that you develop your software in a particular way that's tied to that particular cloud so that you have to port it if you want to move it somewhere else. Those are really the main things that we think about when we think of an open cloud, and that's really resonated with our customers.

Chris: Well, Gordon, this is some great information that you've shared with our audience today. I think you've given them some great takeaways of characteristics they should look for around choosing a vendor around open and cloud and some key questions to ask. Thank you very much.

Gordon: Thanks, Chris.

Tuesday, April 10, 2012

Links for 04-10-2012

- iTWire - Why we need the GPL more than ever - The trend towards more permissive licensing really upsets some folks.

- Here is why Facebook bought Instagram — Tech News and Analysis - "Instagram has what Facebook craves – passionate community. People like Facebook. People use Facebook. People love Instagram. It is my single most-used app."

- Red Hat Contributes More to OpenStack than Canonical Ubuntu - InternetNews. - "Canonical also does not show up in the list of top Linux kernel contributors either. While as a company Canonical and its charismatic leadership continue to proclaim how they are moving the open source community forward, the numbers tell of a different story. The numbers show that Canonical's culture of contribution is not in the same league as Red Hat's."

- Who Wrote OpenStack Essex? A Deep Dive Into Contributions - RT @cloudpundit: Who Wrote OpenStack Essex? A Deep Dive Into Contributions by @jzb via @RWW

- Twitter / @RyanProgramming: An animated Cloud Computin ...

- If Oracle wins its Android suit, everyone loses | Open Source Software - InfoWorld - Good history of Java from a legal and competitive environment perspective.

- The Open Source Implications of the CloudStack Announcement – tecosystems - "While everyone wants to predict outcomes on project and API futures, the fact is that it’s too early in most cases to project accurately. "

- Practical Tips from Four Years of Worldwide Travel | Legal Nomads

- Good is my copilot - Boston.com - "Not every drama or near tragedy is a teachable moment. At the risk of sounding too mellow about the whole incident, we should just sit back, admit stuff happens, and recognize that there was a backup plan: the copilot."

- An Animated History of the MBTA [Map] | BostInno - Animated MBTA map through history

- darktable 1.0 released | darktable

- Questions Amazon Should Answer About Its Cloud Strategy - Forbes - RT @jasonbrooks: Questions Amazon Should Answer About Its Cloud Strategy - Forbes << Good questions.

Monday, April 09, 2012

Of open source licenses and open cloud APIs

Last week was active in the cloud world with Citrix sending CloudStack to the Apache Software Foundation and pulling out of the OpenStack project. Of course, there's been much fevered commentary, some smart--and some not so much.

[To be even more explicit than usual, I work as a Cloud Evangelist at Red Hat, which has partnerships, competes with, and/or has other relationships with various companies and projects mentioned. The opinions I express here are mine alone, should not be taken as official Red Hat positions, and are in no way based on non-public information.]

The basic facts about Citrix' April 3 announcement are as follows. As stated in their press release, "Citrix CloudStack 3 will be released today under Apache License 2.0, and the CloudStack.org community will become part of the highly successful Apache Incubator program." CloudStack is an Infrastructure-as-a-Service cloud management product that came into Citrix by way of its acquisition of Cloud.com in 2011. Not stated in the press release, but widely reported, was that Citrix was pulling out of OpenStack, the open source project on which it had previously planned to focus under the codename Project Olympus.

Those are the basics. Now for some observations.

Without overly downplaying this announcement, it highlighted the unfortunate rush in the press, blogs, and Twitter to crown winners even in the early stages of a technology trend. Suddenly, OpenStack--which lots of folks had widely promoted as having "won" the cloud race--was being talked about as yesterday's news. Lest we forget, a different platform had been talked up as the inevitable winner the year before that. Analyst Steven O'Grady at RedMonk has a typically more nuanced view: "While everyone wants to predict outcomes on project and API futures, the fact is that it’s too early in most cases to project accurately."

I have my own views and am obviously a big believer in Red Hat's cloud projects and position. I'm not going to get into those here but just point out that we're in the very early stages of a long and complicated game. I'd also point out that cloud management isn't one size fits all; different products/projects such as Red Hat's CloudForms and OpenStack address different use cases.

Steven, among others, also observes that the shift of CloudStack into Apache, and the corresponding shift of the code from the GPL to the more permissive Apache license, represents an overall trend:

Faded to the point that permissive licenses are increasingly seen as a license of choice for maximizing participation and community size. It’s not true that copyleft licenses are unable to form large communities; Linux and MySQL are two of the largest open source communities in existence, and both assets are reciprocally licensed. But the case can be made that this will in future be perceived as anachronistic behavior.

I agree with Steven. I wrote about the topic in more detail in a CNET Blog Network post last year. In addition to encouraging participation, as Steven notes, I also speculate that the success of open source as a development and innovation model has made open source projects less concerned with protecting their code from freeloaders, as the terms of a copyleft license attempt to do. (A copyleft license basically means that if you make changes to the code and distribute those changes in the form of a binary, you need to distribute the source code with the changes also.)

Red Hat also uses Apache licensing for projects such as the Deltacloud API (which is also governed by the Apache Software Foundation and which recently graduated from Incubator status--where CloudStack is today--to a top level project) and Project Aeolus (one of the main upstream projects for Red Hat CloudForms hybrid cloud management).

Another point about last week's happenings worth a mention is the API discussion. Application Programming Interfaces are the mechanism that lets you communicate with virtualization platforms and cloud providers. Arguably, they don't get enough attention. For example, what incantations do you need to make in order to spin up a machine image on Amazon?
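To make "incantations" a bit more concrete, here is a minimal sketch of the parameters behind a request to launch an instance from a machine image using Amazon's query-style EC2 API. The action and parameter names follow Amazon's published RunInstances call; the helper function name, the image ID, and the instance type are placeholders of my own for illustration. A real request also needs an API version, credentials, and a request signature, all of which are left out here.

```python
from urllib.parse import urlencode


def build_run_instances_query(image_id, instance_type="m1.small", count=1):
    """Build the unsigned query string for an EC2-style RunInstances call.

    Hypothetical helper for illustration only: a real request must also
    carry an API version, an access key, and a signature.
    """
    params = {
        "Action": "RunInstances",   # the API operation being requested
        "ImageId": image_id,        # which machine image to boot
        "InstanceType": instance_type,
        "MinCount": count,          # launch at least this many instances
        "MaxCount": count,          # and at most this many
    }
    # Sort the parameters so the resulting string is deterministic.
    return urlencode(sorted(params.items()))


# "ami-12345678" is a made-up placeholder image ID.
print(build_run_instances_query("ami-12345678"))
```

A cloud that claims compatibility with this API has to accept the same action and parameter names and respond in the same way, which is why the question of who controls those names matters.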

By way of background, the Amazon APIs--at least those for doing relatively lowest-common-denominator tasks that pretty much any IaaS cloud needs to do--have come to be regarded by some as de facto standards. Which is to say, not really standards but things that are omnipresent enough that they can effectively be regarded as such. Formats, such as the specifics of images that run on Amazon, are a separate but related issue; I won't touch on those further here even though they're at least equally important.

Well, maybe.

One of the key points in the Citrix announcement was that the "proposed Apache CloudStack project will make it easier for customers of all types to deliver cloud services on a platform that is open, powerful, flexible and 'Proven Amazon Compatible.'" In other words, build an AWS-compatible private cloud. We've seen this before with Eucalyptus, which had its own announcement about a supposedly expanded relationship with Amazon a couple of weeks back.

It turns out there's a bit of a wrinkle though. I first got a hint of it from a Twitter post by Netflix cloud architect Adrian Cockcroft. Which led me to this post by Gartner's Lydia Leong in which she writes: "With this partnership, Eucalyptus has formally licensed the Amazon API. There’s been a lot of speculation on what this means." As far as anybody knows, Citrix does not have a corresponding license from Amazon.

Why does this matter?

It matters because open APIs are one of the key characteristics of an open cloud. And this should serve as something of a wakeup call. Perhaps, as Lydia suggests in another post:

I think it comes down to the following: If Amazon believes that they can innovate faster, drive lower costs, and deliver better service than all of their competitors that are using the same APIs (or, for that matter, enterprises who are using those same APIs), then it is to their advantage to encourage as many ways to “on-ramp” onto those APIs as possible, with the expectation that they will switch onto the superior Amazon platform over time.

However, the fact that these APIs can be licensed and that one or more vendors believe there to be business advantage to licensing those APIs should set off at least gentle alarms. At the least, it raises questions about what behaviors Amazon could at least potentially restrict in the absence of an explicit license.

Over at Forbes, Dan Woods asks:

Are there limits to the use of Amazon’s APIs?

How will community experience inform the evolution of Amazon’s APIs?

What is the process that will govern the evolution of the Amazon APIs?

These and others are good questions to ask. For history has shown time and time again that de facto open is not open. Times change and companies change.

Thursday, April 05, 2012

Links for 04-05-2012

- Prices Are People: A Short History of Working and Spending Money - Derek Thompson - Business - The Atlantic

- Before the Internet: The golden age of online services | ITworld

- IBM CIO Discusses Big Blue's BYOD Strategy | PCWorld Business Center

- Just how big are porn sites? | ExtremeTech - Staggering numbers.

- Google Art Project

- Angry Birds, Farmville and Other Hyperaddictive ‘Stupid Games’ - NYTimes.com - "And so a tradition was born: a tradition I am going to call (half descriptively, half out of revenge for all the hours I’ve lost to them) “stupid games.” In the nearly 30 years since Tetris’s invention — and especially over the last five, with the rise of smartphones — Tetris and its offspring (Angry Birds, Bejeweled, Fruit Ninja, etc.) have colonized our pockets and our brains and shifted the entire economic model of the video-game industry. Today we are living, for better and worse, in a world of stupid games."

- Can Copyleft Clouds Find Contributors?

- 5 takeaways from the CloudStack-OpenStack dustup — Cloud Computing News - Agree with some. Disagree with some.

Wednesday, April 04, 2012

Links for 04-04-2012

- Ask not what OpenStack can do for you… « Seeing the fnords - "Over the last months I’ve seen more and more tweets and news articles using the formulation “OpenStack should”, as in “OpenStack should support Amazon APIs since it’s the de-facto standard”. I think there is a fundamental misconception there and I’d like to address it."

- Eleven Tips for Successful Cloud Computing Adoption | Cloud Computing Journal - Nice list.

- Wig Wam Bam. « The Loose Couple's Blog - "The deep-rooted politic, hidden agendas and the overall return have made little sense in terms of commercial opportunity and the de facto positioning of “it [feature] will be available in the next release” will not have sat well within the corridors of power. Add that to the recent “insert coin to continue” trend within OpenStack and the dreadful, garish “loophole” in the Apache License (sigh) that almost begs for “embrace and extend but do not return code” will have contributed significantly to the sounding of the death knell. How the latter plays out for Cloudstack will be interesting to observe too."

- The Amazon-Eucalyptus partnership - "With this partnership, Eucalyptus has formally licensed the Amazon API. There’s been a lot of speculation on what this means. My understanding is the following:"

- Freeform Community Research - "So if it’s not Generation Y, who is it that’s pushing to have their own devices connected to the corporate network? As can be seen in Figure 1, outside of the IT department itself, it’s the senior executives who are most insistent on using a personal device for work. "

Tuesday, April 03, 2012

Links for 04-03-2012

- Citrix Breaks Away From OpenStack | Forrester Blogs - "With Eucalyptus staging a revival, cloudstack breaking away and OnApp locking in service provider customers, the window for OpenStack to become the Linux of IaaS is beginning to close. "

- Girls Around Me and the end of Internet innocence | Molly Rants - CNET News - "Thank you, I-Free, for ushering in a tipping point in the conversation about online privacy. You freaked us right the hell out by showing us exactly what's possible with information we're freely sharing online--and maybe it'll finally make us all stop."

- Love Hotels and Unicode | ReignDesign

- Citrix Gives Cloudstack to the Apache Software Foundation and Turns its Back on OpenStack | ServicesANGLE - Ref: Citrix, CloudStack, OpenStack, AWS, etc. << My, but the game is most certainly afoot.

- Connections: Crowdsourcing prediction and other data fun with Oscar predictions - Over weekend crunched 25+ yrs of data from an Oscar contest. Interesting crowdsourced predictions results

- 25 Years of IBM’s OS/2: The Strange Days and Surprising Afterlife of a Legendary Operating System | Techland | TIME.com

- Take a drive through Boston circa 1964

- Hazing, Dartmouth, and Animal House, Part II - Ricochet.com

Monday, April 02, 2012

Crowdsourcing and other data fun with Oscar predictions

And now for something completely different.

By way of background, a classmate of mine from undergrad has been holding Oscar parties for over 25 years. As part of this Oscar party, he's also held a guess-the-winners contest. With between 50 and 100 contest entries annually for most of the period, that's a lot of ballots. And, being Steve, he's carefully saved and organized all that data.

Over the years, we've chatted about various aspects of the results, observed some patterns, and wondered about others. For example, has the widespread availability of Oscar predictions from all sorts of sources on the Internet changed the scores in this contest? (Maybe. I'll get to that.) After the party this year, I decided to look at the historical results a bit more systematically. Steve was kind enough to send me a spreadsheet with the lifetime results and follow up with some additional historical data.

I think you'll find the results interesting.

But, first, let's talk about the data set. The first annual contest was in 1987 and there have been 1,736 ballots over the years, with an average of 67 annually; the number of ballots has always been in the double digits. While the categories on the ballot and some of the scoring details have been tweaked over the years, the maximum score has always been 40 (different categories are worth different numbers of points). There's a cash pool, although that has been made optional in recent years. Votes are generally independent and secret although, of course, there's nothing to keep family members and others from cooperating on their ballots if they choose to do so.

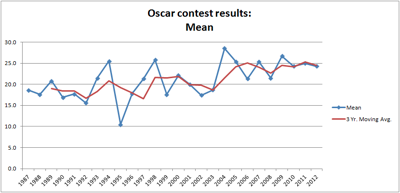

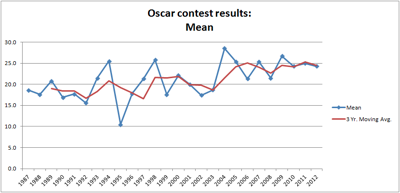

The first thing I looked at was whether there were any trends in the overall results. The first figure shows the mean scores from each year graphed in a time series, as well as a three-year moving average of that data. I'll be mostly sticking to three-year moving averages from here on out, as they do a nice job of smoothing data that is otherwise spiky enough to make patterns hard to discern. (Some Oscar years bring more upsets/surprises than others, causing scores to bounce around quite a bit.)
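For anyone who wants to try the same smoothing on their own numbers, here's a minimal sketch of a three-year moving average. It assumes a trailing window (each point averages that year and the two before it); a centered window would be just as reasonable a reading of the charts. The function name and the scores are made up for illustration and are not the contest's real data.

```python
def moving_average(values, window=3):
    """Trailing moving average; the first window-1 entries are None."""
    result = []
    for i in range(len(values)):
        if i + 1 < window:
            # Not enough history yet to fill a full window.
            result.append(None)
        else:
            chunk = values[i + 1 - window : i + 1]
            result.append(sum(chunk) / window)
    return result


# Made-up yearly mean scores (assumption), one entry per contest year.
yearly_means = [24.0, 21.0, 27.0, 22.5, 28.5]
smoothed = moving_average(yearly_means)
print(smoothed)
```

The spiky year-to-year swings in the raw list flatten out noticeably in the smoothed one, which is the whole point.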

Is there a trend? There does seem to be a slight permanent uptick in the 2000s, which is right where you'd expect there to be an uptick if widespread availability of information on the Internet were a factor. That said, the effect is slight. And running the data through the exponential smoothing function in StatPlus didn't turn up a statistically significant trend for the time series as a whole. (Which represents the sum total of statistical analysis applied to any of this data.) As we'll get to, there are a couple of other things that suggest something is a bit different in the 2000s relative to the 1990s, but it's neither a big nor an indisputable effect.

Color me a bit surprised on this one. I knew there wasn't going to be a huge effect but I expected to see a clearer indication given how much (supposedly) informed commentary is now widely available on the Internet compared to flipping through your local newspaper or TV Guide in the mid-nineties.

We'll return to the topic of trends but, for now, let's turn to something that's far less ambiguous. And that's the consistent "skill" of Consensus. Who is Consensus? Well, Consensus is a virtual contest entrant who, each year, looks through all the ballots and, for each category, marks its ballot with the most common choice among the human contest entrants. If 40 people voted for The Artist, 20 for Hugo, and 10 for The Descendants for Best Picture, Consensus would put a virtual tick next to The Artist. (Midnight in Paris deserved to win but I digress.) And so forth for the other categories. Consensus then gets scored just like a human-created ballot.
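The Consensus rule is simple enough to sketch in a few lines. This is only an illustration under some assumptions: ballots are represented as dictionaries mapping category to pick, the point value for Best Picture is invented, and ties go to whichever pick shows up first in the ballot list (the real contest's tie handling isn't something I've specified here).

```python
from collections import Counter


def consensus_ballot(ballots):
    """Build a ballot from the most common pick in each category."""
    categories = ballots[0].keys()
    return {
        cat: Counter(b[cat] for b in ballots).most_common(1)[0][0]
        for cat in categories
    }


def score(ballot, winners, points):
    """Sum the points for every category the ballot got right."""
    return sum(points[cat] for cat, pick in ballot.items() if winners.get(cat) == pick)


# The Best Picture split from the example above: 40 / 20 / 10.
ballots = (
    [{"Best Picture": "The Artist"}] * 40
    + [{"Best Picture": "Hugo"}] * 20
    + [{"Best Picture": "The Descendants"}] * 10
)
consensus = consensus_ballot(ballots)

winners = {"Best Picture": "The Artist"}
points = {"Best Picture": 5}  # hypothetical point value (assumption)
print(consensus, score(consensus, winners, points))
```

Consensus never sees the winners; it only sees what everyone else picked, and then gets graded like any other entrant.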

As you can see, Consensus does not usually win. But it comes close.

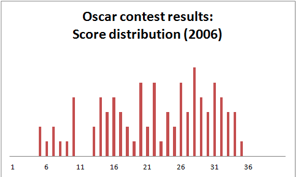

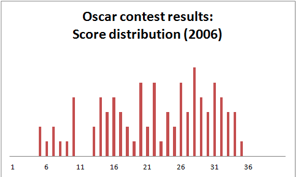

And Consensus consistently beats the mean, which is to say the average total score for all the human-created ballots. Apparently, taking the consensus of individual predictions is more effective than averaging overall results. One reason is that Consensus tends to exclude the effect of individual picks that are, shall we say, "unlikely to win." Whereas ballots seemingly created using a dartboard still get counted in the mean and thereby drive it down. If you look at the histogram for 2006 results, you'll see there are a lot of ballots scattered all over. Consensus tends to minimize the effect of the low-end outliers.
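A toy example shows why this can happen. The five ballots below are invented (three categories, one point each, "A" always the winner); they are not contest data. Most entrants miss one category each, and one ballot is pure dartboard, yet the per-category plurality still lands on the right answer every time.

```python
from collections import Counter

# Hypothetical three-category contest, one point per category (assumption).
winners = ["A", "A", "A"]
ballots = [
    ["A", "A", "B"],
    ["A", "B", "A"],
    ["B", "A", "A"],
    ["A", "A", "A"],
    ["C", "B", "C"],  # the "dartboard" ballot
]


def score(ballot):
    """Count the categories where the pick matches the winner."""
    return sum(pick == win for pick, win in zip(ballot, winners))


# Per-category plurality pick across all ballots.
consensus = [Counter(col).most_common(1)[0][0] for col in zip(*ballots)]

mean_score = sum(score(b) for b in ballots) / len(ballots)
consensus_score = score(consensus)
print(mean_score, consensus_score)
```

The dartboard ballot drags the mean down, but it never forms a plurality in any category, so it has no effect on Consensus at all.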

But how good is Consensus as a prediction mechanism compared to more sophisticated alternatives?

We've already seen that it doesn't usually win. While true, this isn't a very interesting observation if we're trying to figure out the best way to make predictions. We can't know a given year's winner ahead of time.

But we can choose experts in various ways. Surely, they can beat a naive Consensus that includes the effects of ballots from small children and others who may get scores down in the single digits.

For the first expert panel, I picked those with the top five highest average scores from among entrants in at least four of the first five contests. I then took the average of those five during each year and penciled it in as the result of the expert panel. It would have been interesting to also see the Consensus of that panel but that would require reworking the original raw data from the ballots themselves. Because of how the process works, my guess is that this would be higher than the panel mean but probably not much higher.
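Here's roughly what that selection looks like in code. It's a sketch, not the spreadsheet I actually used: the names and scores are invented, the panel size is cut to two to keep the sample data short, and when a panelist skips a year I've assumed the panel mean is taken over whoever did enter.

```python
def pick_panel(scores, qualifying_years, min_entries=4, size=5):
    """Top `size` entrants by average score over the qualifying years,
    limited to those who entered at least `min_entries` of them."""
    averages = {}
    for name, by_year in scores.items():
        entered = [by_year[y] for y in qualifying_years if y in by_year]
        if len(entered) >= min_entries:
            averages[name] = sum(entered) / len(entered)
    return sorted(averages, key=averages.get, reverse=True)[:size]


def panel_mean(scores, panel, year):
    """Average score of the panelists who entered in `year`, else None."""
    entered = [scores[name][year] for name in panel if year in scores[name]]
    return sum(entered) / len(entered) if entered else None


# Invented entrants and scores (assumption), keyed by name then year.
scores = {
    "ann": {1987: 30, 1988: 28, 1989: 31, 1990: 29, 1991: 32, 1992: 30},
    "bob": {1987: 20, 1988: 22, 1989: 21, 1990: 23, 1992: 26},
    "cat": {1987: 35, 1988: 36, 1992: 34},  # too few early entries to qualify
    "dan": {1987: 25, 1988: 27, 1989: 26, 1990: 28, 1991: 24, 1992: 28},
}
panel = pick_panel(scores, range(1987, 1992), min_entries=4, size=2)
print(panel, panel_mean(scores, panel, 1992))
```

Note that the entrant with the highest raw scores gets left off for lack of a track record, which is the price of picking the panel prospectively.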

For the second panel, I just took the people with the 25 highest scores from among those who had entered the contest for at least 20 years. This is a bit of a cheat in that, unlike the first panel, it's retrospective--that is, it requires you in 1987 to know who is going to have the best track record by the time 2012 rolls around. However, as it turns out, the two panels post almost exactly the same scores. So, there doesn't seem much point in overly fussing with the panel composition. Whatever I do, even if it involves some prescience, ends up at about the same place.

So now we have a couple of panels of proven experts. How did they do? Not bad.

But they didn't beat Consensus.

To be sure, the trend lines do seem to be getting closer over time. I suspect, apropos the earlier discussion about trends over time, we're seeing that carefully-considered predictions are increasingly informed by the general online wisdom. The result is that Consensus in the contest starts to closely parallel the wisdom of the Internet because that's the source so many people entering the contest use. And those people who do the best in the contest over time? They lean heavily on the same sources of information too. There's increasingly a sort of universal meta-consensus from which no one seriously trying to optimize their score can afford to stray too far.

It's hard to prove any of this though. (And the first few years of the contest are something of an outlier compared to most of the 1990s. While I can imagine various things, no particularly good theory comes to mind.)

Let me just throw out one last morsel of data. Even if we retrospectively pick the most successful contest entrants over time, Consensus still comes out on top. Against a Consensus average of 30.5 over the life of the contest, the best >20-year contestant scored 28.4. If we broaden the population to include those who have entered the contest for at least 5 years, one person scored a 31--but this was over the last nine-year period, when Consensus averaged 33.

In short, it's impossible to beat Consensus consistently, even by matching it against a person or persons who we know, with the benefit of hindsight, to be the very best at predicting the winners. We might improve results by taking the consensus of a subset of contestants with a proven track record. It's possible that experts coming up with answers cooperatively would improve results as well. But even the simplest, uncontrolled Consensus does darn well.

This presentation from Yahoo Research goes into a fair amount of depth about different approaches to crowdsourced predictions, as this sort of technique is trendily called these days. It seems to be quite an effective technique for certain types of predictions. When Steve Meretzky, who provided me with this data, and I were in MIT's Lecture Series Committee, the group had a contest each term to guess attendance at our movies. (Despite the name, LSC was and is primarily a film group.) There too, the consensus prediction consistently scored well.

I'd be interested in better understanding when this technique works well and when it doesn't. Presumably, a critical mass of the pool making the prediction needs some insight into the question at hand, whether based on their own personal knowledge or by aggregating information from elsewhere. If everyone in the pool is just guessing randomly, the consensus of those results isn't going to magically add new information. And, of course, there are going to be many situations where data-driven decisions are going to beat human intuition, however it's aggregated.

But we do know that Consensus is extremely effective for at least certain types of prediction. Of which this is a good example.
